Accessible and Equitable Artificial Intelligence Systems

4. Guidance

4.1 Accessible AI

AI systems and the processes, resources, services and tools used to plan, create, implement, maintain and monitor them need to be accessible to people with disabilities. People with disabilities and users of AI systems should be able to be active participants in all stakeholder roles in the AI lifecycle.

Without the possibility of participation of people with disabilities in all AI ecosystem roles, the perspectives of people with disabilities will be absent in essential decisions. Participation of people with disabilities in these roles will result in greater systemic accessibility and equity. Integrating the diverse perspectives of people with disabilities in these roles will also increase innovation, risk detection and risk avoidance.

4.1.1 People with disabilities as full participants in AI creation and deployment

People with disabilities should be able to participate fully as stakeholders in all roles in the AI lifecycle. These roles include:

- Planners

- Designers

- Developers

- Implementors

- Evaluators

- Refiners

- Consumers of AI systems and their components

To enable full participation in the AI lifecycle, the design and development tools, processes, and resources employed by an organization designing, developing, implementing, evaluating or refining AI should be accessible to people with disabilities.

People with disabilities should be able to participate as producers and creators in the AI lifecycle, not simply as consumers of AI products. Ensuring that all aspects of AI ecosystems are accessible will support the participation of people with disabilities in decision making related to the creation of AI. Accessible AI systems will also allow people with disabilities to benefit economically through employment opportunities related to AI.

To meet this guidance, start by:

- Using accessible tools and processes to design and develop AI systems and their components. Tools should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

- Using accessible tools and resources to implement AI systems where AI systems are deployed, including tools and resources used to customize pretrained models, train models, and set up, maintain and monitor AI systems. Tools should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

- Using accessible deployment tools and processes to assess, monitor, report issues, evaluate and improve AI systems. Tools should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

4.1.2 People with disabilities as users of AI systems

AI systems need to be accessible to people with disabilities. The use and integration of AI tools is becoming widespread. If these tools are not designed accessibly, people with disabilities will be excluded from using them, magnifying digital exclusion.

To meet this guidance, start by:

- Using AI systems with accessible user interfaces and interactions. User interfaces should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

- Providing accessible documentation to address disclosure, notice, transparency, explainability and contestability of AI systems, as well as their function, decision-making mechanisms, potential risks and implementation. Documentation should be kept up-to-date and reflect the current state of the AI being used. Information should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

- Providing accessible feedback mechanisms for individuals who use or are impacted by AI systems. Feedback mechanisms should meet the functional performance statements in section 4.2 of CAN/ASC - EN 301 549:2024 - Accessibility requirements for ICT products and services standard. Specifically:

  - 5. Generic requirements

  - 9. Web

  - 10. Non-web documents

  - 11. Software

  - 12. Documentation and support services

- Providing alternative options when assistive technology that relies on AI is provided as an accommodation and the data to be recognized or translated is outlying, which compromises performance. For example, speech recognition systems will not work as well for people whose speech is very different from the training data.

4.2 Equitable AI

Where people with disabilities are the subjects of decisions made by AI systems or where AI systems are used to make decisions that have an impact on people with disabilities, those decisions and uses of AI systems should result in equitable treatment of people with disabilities.

People with disabilities, by virtue of their diversity, experience the extremes of both the opportunities and risks of AI systems. In addition to the specific equity risks experienced by other protected groups, such as a lack of representative data and the design of biased AI systems, people with disabilities are impacted by statistical discrimination.

4.2.1 Statistical discrimination

AI systems in development or use should be assessed and monitored to determine the performance and impact of decisions across the full range of standard deviations from the statistical average or target optimum.

Even with full proportional representation in data, statistical discrimination will continue to affect people with disabilities because they are often minorities or diverge greatly from the statistical mean. The consequence of such discrimination is that an AI system’s predictions are wrong or exclude people with disabilities.

To meet this guidance, start by:

- Using impact assessments to investigate how statistical discrimination affects people with disabilities and document findings. These findings shall be publicly disclosed in an accessible format and be revisited and continuously monitored.

- Prioritizing assessment of risks for small minorities where risk assessment frameworks are employed to determine risks and benefits of AI systems. This includes prioritizing people with disabilities, who feel the greatest impact of harm.

- Not using risk assessments based solely on the risks and benefits to the majority, which would discriminate against minority groups.

- Using exploration algorithms that seek candidates who are different from current employees to increase the diversity of employees where AI systems are used in hiring. Doing so may reduce statistical discrimination.

- Using inverted metrics where social media is used to gather input to highlight novel and minority contributions as well as majority contributions.

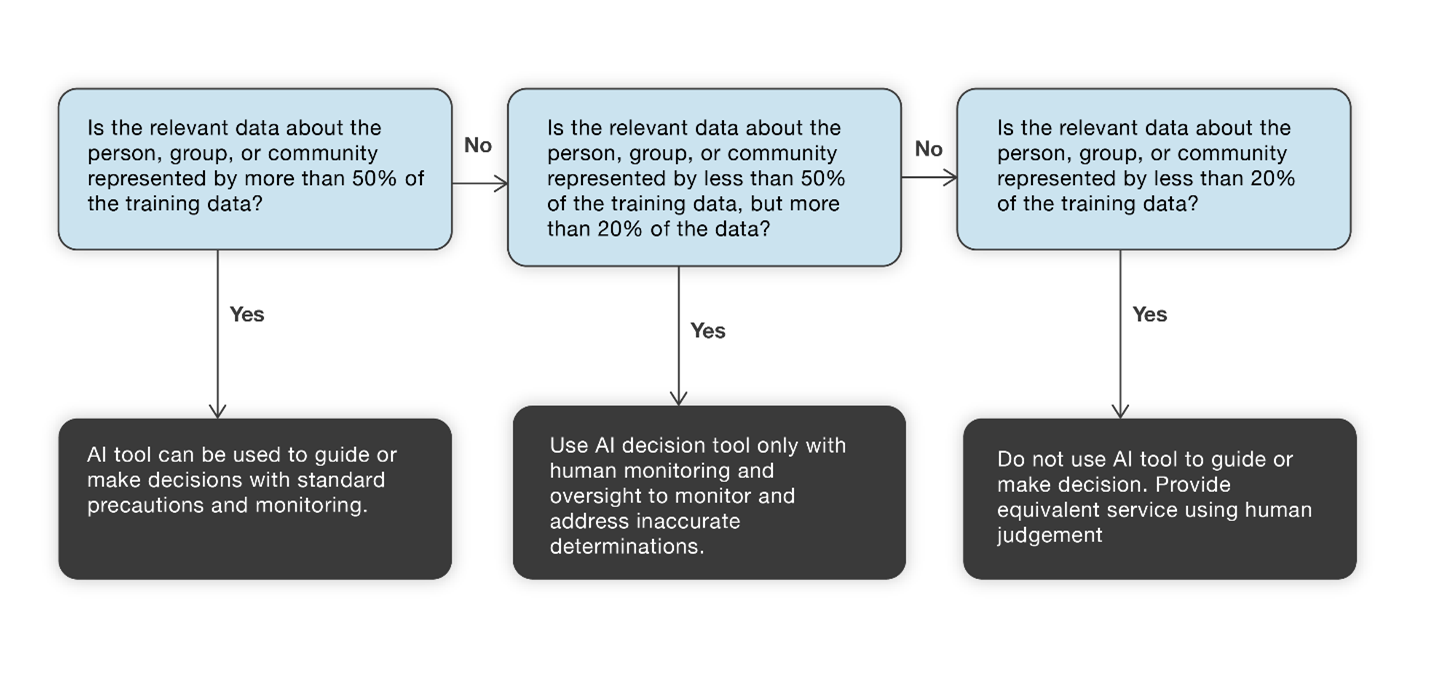

Figure 1. Implementation Phase: Determining Statistical Discrimination

Note: This step is in addition to ensuring that there is full and accurate proportional representation in the training data, and that the labels, proxies and algorithms do not contain negative bias. Statistical discrimination is the impact of the use of statistical reasoning on decisions regarding small minorities.

Example: AI tools deployed to select promising applicants for employment (especially in large competitive hiring scenarios) are trained to match data profiles of past successful employees. The algorithm is designed to select applicants who match the target successful employee data profile. People with disabilities are different from the average and will be screened out. When implementing AI, question the basis of decisions to ensure that the decision criteria do not discriminate against people who happen to be marginalized minorities in the data set.
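One way to assess performance across the range of deviation from the average is to group individuals by how far they sit from the mean and compare error rates per band. The following is a minimal sketch, assuming a single numeric feature and a record of whether each prediction was correct. The function name `error_rate_by_deviation`, the band edges and the sample data are hypothetical illustrations, not part of this guidance.

```python
from statistics import mean, pstdev


def error_rate_by_deviation(values, correct, edges=(1.0, 2.0)):
    """Group cases by |z-score| band and return the error rate per band.

    values  -- one numeric feature per person (hypothetical input)
    correct -- True where the AI system's prediction was right
    edges   -- illustrative band boundaries, in standard deviations
    """
    mu, sigma = mean(values), pstdev(values)
    if sigma == 0:
        raise ValueError("feature has no variation; bands are undefined")
    labels = (
        [f"<{edges[0]} sd"]
        + [f"{lo}-{hi} sd" for lo, hi in zip(edges, edges[1:])]
        + [f">={edges[-1]} sd"]
    )
    totals = {label: [0, 0] for label in labels}  # label -> [errors, cases]
    for value, ok in zip(values, correct):
        z = abs(value - mu) / sigma
        index = sum(z >= edge for edge in edges)  # number of edges passed
        totals[labels[index]][0] += 0 if ok else 1
        totals[labels[index]][1] += 1
    # Bands with no cases report None rather than a misleading 0.0.
    return {
        label: (errors / cases if cases else None)
        for label, (errors, cases) in totals.items()
    }


# Invented data: nine people near the mean, one far from it.
values = [48, 49, 50, 50, 50, 50, 51, 52, 50, 90]
correct = [True] * 9 + [False]
bands = error_rate_by_deviation(values, correct)
```

In this invented data the system is right nine times out of ten overall, yet the only error falls in the band furthest from the mean. An aggregate accuracy figure would hide that pattern.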

4.2.2 Reliability, accuracy and trustworthiness

AI systems deployed by organizations should be designed to guard against the pursuit of accuracy that leads to falsely rejecting people with a disability due to either:

- over-trust in an otherwise accurate and reliable system, or

- ignoring failures as anecdotal and affecting only a small minority.

High accuracy is generally a good outcome, but accuracy can be at odds with generalization: the more closely a system is tuned to the data it was trained on, the less well it generalizes to people who differ from that data. This can unfairly exclude qualified individuals, including people with disabilities who are poorly represented in the model from the start. Pursuit of greater accuracy will only exacerbate this issue.

Over-trust in an AI system, to the point where people assume its outputs are always correct and become lenient when monitoring the system, often results in failures and incorrect predictions being missed by those monitoring the system. The lack of oversight can contribute to the harm experienced by people with disabilities, including when complaints are raised by people with disabilities. Because the system is perceived as reliable, the complaint is dismissed as anecdotal, since the failure is rare and felt only by a small minority.

To meet this guidance, start by:

- Using accuracy measurements that include disaggregated accuracy results for people with disabilities. Accuracy measurements should also consider the context of use and the conditions relative to people with disabilities.
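Disaggregated accuracy can be sketched as follows. This is an illustrative example only: the function name `disaggregated_accuracy`, the record layout and the group labels are assumptions, and in practice group information would be collected with consent and appropriate privacy protections.

```python
from collections import defaultdict


def disaggregated_accuracy(records):
    """Return overall accuracy and accuracy per self-identified group.

    records -- iterable of (group, predicted, actual) tuples; the group
               labels here are hypothetical.
    """
    counts = defaultdict(lambda: [0, 0])  # group -> [correct, total]
    for group, predicted, actual in records:
        counts[group][0] += int(predicted == actual)
        counts[group][1] += 1
    total = sum(t for _, t in counts.values())
    if total == 0:
        raise ValueError("no records to evaluate")
    total_correct = sum(c for c, _ in counts.values())
    per_group = {g: c / t for g, (c, t) in counts.items()}
    return total_correct / total, per_group


# Invented data: 18 correct decisions for one group, 1 of 2 for another.
records = (
    [("no disability reported", "accept", "accept")] * 18
    + [("screen reader user", "reject", "accept")]
    + [("screen reader user", "accept", "accept")]
)
overall, per_group = disaggregated_accuracy(records)
```

Reporting `per_group` next to `overall` makes it visible when a system that looks accurate in aggregate performs poorly for a small group.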

4.2.3 Freedom from negative bias

Organizations shall ensure that AI systems are not negatively biased against people with disabilities due to biases in the training data, data proxies used, data labels or algorithmic design.

The discriminatory biases of human designers and developers, digital disparities, or discriminatory or bigoted content in training data can all lead to negatively biased AI systems affecting people of different genders, races, ages, abilities and other marginalized minorities. AI systems can amplify, accelerate and automate these biases.

However, unlike a simpler variable like gender, race or age, “persons with a disability” is a category that aggregates people with disparate capabilities rather than a single measurable variable. While it is unlikely that negative biases against people with disabilities in AI systems can be completely eliminated, measures should be taken to remove common causes of bias.

To meet this guidance, start by:

- Using training data that represents a broad range of people with disabilities.

- Using a labelling process that engages people with disabilities to choose and curate data labels.

- Not using datasets that include data that stereotypes or discriminates against people with disabilities.

- Not using stereotypical proxy variables to stand in for people with disabilities or their data characteristics when training, testing and validating AI systems.

- Not using algorithms that are biased against people with disabilities when procuring, designing or tuning AI systems.

4.2.4 Equity of decisions and outcomes

Organizations deploying AI systems should monitor the outcomes of the decisions of AI systems with respect to equitable treatment of people with disabilities and use this data to tune and refine those systems.

AI decisions are often misaligned with the desired outcomes, so the information about the outcomes should be used to tune the decision system. This misalignment happens when there is a failure to take into account the complexity and changes within the context of the decisions being made. As people with disabilities often have complex, unpredictable lives with many entangled barriers, they are most impacted by this misalignment.

To meet this guidance, start by:

- Using systems to explain and monitor the determinants of decisions made by deployed AI systems and ensure that determinants are equitable to people with disabilities and reasonable with respect to the decisions being made.

4.2.5 Safety, security and protection from data abuse

Organizations should develop plans to protect people with disabilities in the case of data breaches, malicious attacks using AI systems, or false flagging as suspicious in security systems.

People with disabilities are the most vulnerable to data abuse and misuse, and they are frequently targeted by fraudulent claims and scams. This is especially the case for people with intellectual disabilities but also people relying on auditory information or other alternative access systems.

Privacy protections do not work for people who are highly unique, as they can be easily re-identified. People with disabilities are also frequently put into situations where they need to barter their privacy for essential services.
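The re-identification risk can be illustrated by counting how many records share each combination of remaining attributes after names are removed. The sketch below is a simplified check in the spirit of k-anonymity. The function name `smallest_groups`, the field names and the threshold `k` are hypothetical choices for illustration.

```python
from collections import Counter


def smallest_groups(rows, quasi_identifiers, k=5):
    """Return the attribute combinations shared by fewer than k records.

    A combination held by only one record can single that person out,
    even though no name or ID number appears in the data.
    """
    combos = Counter(
        tuple(row[field] for field in quasi_identifiers) for row in rows
    )
    return {combo: n for combo, n in combos.items() if n < k}


# Invented data: six similar records and one highly unique record.
rows = [{"postal_prefix": "A1A", "assistive_tech": "none"}] * 6 + [
    {"postal_prefix": "A1A", "assistive_tech": "eye-gaze keyboard"}
]
at_risk = smallest_groups(rows, ["postal_prefix", "assistive_tech"])
```

Here the single record with an uncommon assistive technology is the only one reported, which reflects why de-identification alone offers weak protection for people who are highly unique in a data set.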

When AI is used in security efforts to flag security risks, anomalies that require financial audits, tax fraud, insurance risks or security threats, these systems disproportionately flag people with disabilities because they deviate from the recognized or average patterns.

To meet this guidance, start by:

- Creating plans that identify risks associated with disabilities, as well as clear and swift actions to protect people with disabilities.

- Assisting in recovering from data breaches, misuses and abuses.

- Using AI systems with caution and human oversight where AI systems flag individuals for investigation.

- Monitoring AI system decisions to ensure that people with disabilities are not disproportionately flagged for investigation.
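Monitoring for disproportionate flagging could start with a simple comparison of flag rates between groups. The following is a minimal sketch under stated assumptions: the function name `flag_rate_ratio`, the group labels and the counts are invented, and any ratio that triggers a review would need to be agreed with people with disabilities rather than taken from this example.

```python
def flag_rate_ratio(flags_by_group, reference_group):
    """Compare each group's flag rate with a reference group's rate.

    flags_by_group -- {group: (number_flagged, number_reviewed)}
    Returns {group: ratio}. A ratio well above 1.0 suggests the group is
    flagged disproportionately and warrants human review.
    """
    flagged, reviewed = flags_by_group[reference_group]
    if reviewed == 0 or flagged == 0:
        raise ValueError("reference group rate is zero or undefined")
    reference_rate = flagged / reviewed
    return {
        group: (f / n) / reference_rate
        for group, (f, n) in flags_by_group.items()
        if n > 0  # skip groups with no reviewed cases
    }


# Invented counts: 2% of one group is flagged, 12% of the other.
flags = {
    "no disability reported": (20, 1000),
    "disability reported": (12, 100),
}
ratios = flag_rate_ratio(flags, "no disability reported")
```

In this invented data the second group is flagged about six times as often as the reference group, which is the kind of signal that should prompt human oversight before any investigation proceeds.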

4.2.6 Freedom from surveillance

Organizations should refrain from using AI tools to surveil employees and clients with disabilities.

AI productivity tools and AI tools that surveil employees, clients or customers use metrics that unfairly measure people with disabilities who may differ from the average in characteristics or behaviour. These tools are not only an invasion of privacy but also unfairly judge or assess people with disabilities.

To meet this guidance, start by:

- Not using AI systems for surveillance.

4.2.7 Freedom from discriminatory profiling

Organizations should refrain from using AI tools for biometric categorization (assigning people to specific categories based on their biometric data, such as facial or vocal features), emotion analysis or predictive policing of employees and clients with disabilities.

People with disabilities are disproportionately vulnerable to discriminatory profiling. Disability is often medicalized, and unfounded assumptions are made regarding statistical correlations.

To meet this guidance, start by:

- Not using AI systems for biometric categorization.

- Not using AI systems for emotion analysis.

- Not using AI systems for predictive policing.

4.2.8 Freedom from misinformation and manipulation

Organizations should ensure that AI systems do not repeat or distribute stereotypes or misinformation about people with disabilities and are not used to manipulate people with disabilities.

Systems intended to remove or prevent the distribution of discriminatory content can inadvertently censor people with disabilities or the discussion of sensitive topics by individuals attempting to address discrimination. Examples of such discrimination include:

- The exclusion of people with facial differences from social media sites.

- The replacement of relevant terms in captioning when discussing social justice issues.

To meet this guidance, start by:

- Engaging people with disabilities to determine data moderation criteria used in AI systems.

- Not censoring or excluding people with disabilities when toxicity monitors are employed, and not preventing the discussion of social justice issues based on censored words.

4.2.9 Transparency, reproducibility and traceability

Where AI systems are designed, developed, procured and/or deployed by organizations, processes should be integrated to enable transparent disclosure of AI decisions and their impact.

Making AI systems transparent can help users understand how the system affects them, allowing them to raise meaningful commentary or recommend meaningful changes to the system. This is especially important when systems make decisions regarding people or a specific subset of people, such as people with disabilities, who already face barriers when accessing any kind of information.

To meet this guidance, start by:

- Using AI systems that generate reproducible results where possible, to support contestation.

- Documenting and making publicly available goals, definitions, design choices and assumptions regarding the development of an AI system where decisions made by AI systems are not reproducible.

4.2.10 Accountability

There should be a traceable chain of human responsibility, accountable to accessibility expertise, that makes it clear who is liable for decisions made by an AI system.

Who or what organization is responsible for the negative outcome of an AI system depends on the stage at which harmful impacts were introduced. Transparency and a traceable chain of human responsibility are imperative for determining responsibility.

With respect to people with disabilities, accessibility and disability knowledge and experience should be a factor in determining where the negative impact was introduced, why it is harmful to persons with a disability, and who is responsible for the harm.

To meet this guidance, start by:

- Establishing accountability of the training process.

- Assessing the breadth and diversity of training sources.

- Tracking provenance or source of training data.

- Verifying lack of stereotypical or discriminatory data sources.

- Ensuring the training and fine-tuning processes do not produce harmful results for people with disabilities.

4.2.11 Individual agency, informed consent and choice

Where an AI system will make unsupervised decisions by default, AI providers should offer a multi-level optionality mechanism (the ability for users to opt out, switch to another system, or contest or correct the output) through which users can request an equivalently full-featured and timely alternative decision-making process.

Unsupervised automated decision-making has associated risk. Impacted individuals are unable to avoid or opt out of the harms of statistical discrimination unless human-supervised and AI-free alternatives are provided. These alternative services need to offer an equivalent level of service, currency, detail and timeliness.

To meet this guidance, start by:

- Providing alternatives to AI-based decisions at the user’s choice. The alternative should either be performed without the use of AI or made using AI with human oversight and verification of the decision.

- Establishing reasonable service level standards for all levels of optionality, ensuring that the human service modes meet the same standards as those of the fully automated default.

- Not using disincentives that reduce individual freedom of choice to select human-supported or AI-free service modes. This requires alternative services to be sufficiently well-resourced, equivalent in functionality, and comparable to the unsupervised default.

4.2.12 Support of human control and oversight

AI systems should provide a mechanism for reporting, responding to, and remediating or redressing harms resulting from statistical discrimination by an AI system, which is managed by a well-resourced, skilled, integrated and responsive human oversight team.

It is imperative for people with disabilities to be able to identify, understand and address the harms resulting from AI decision-making. If reparation is needed, it requires direct engagement with communities and individuals with disabilities to mutually address harms or discriminatory decisions made by AI systems.

To meet this guidance, start by:

- Collecting and disclosing metrics about harm reports received and contestations of decisions. These reports shall adhere to privacy and consent guidelines.

- Integrating reports of harm, challenges and corrections to the decisions of an AI system into a privacy-protected feedback loop that is used to improve the results of the model.

- Using a feedback loop with human oversight and consultation with disability community members to ensure that harms are sufficiently remediated in the AI model.

4.2.13 Cumulative harms

Organizations should have a process to assess impact from the perspective of people with disabilities and to prevent statistical discrimination that is caused by the aggregate effect of many cumulative harms that intersect or build up over time as the result of AI decisions that are otherwise classified as low or medium risk (for example, topic prioritization, feature selection or choice of media messaging).

Statistical discrimination affects risk management approaches, in that the risk-benefit determination is usually made statistically or from quantified data. This causes cumulative harms from the dismissal of many low-impact decisions all skewed against someone who is a small minority.

To meet this guidance, start by:

- Engaging people with disabilities to assess the cumulative impact of AI decisions.

- Going beyond the specific application when monitoring potential harm to consider all AI guidance or decisions that impact users of the AI application.
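The idea of cumulative harm can be sketched by summing harm reports per person across every AI system they encounter, instead of assessing each system in isolation. This is an illustration only: the function name `cumulative_harm`, the severity weights and the review threshold are hypothetical and would need to be set together with people with disabilities.

```python
from collections import defaultdict

# Illustrative weights only; not values defined by this guidance.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 10}


def cumulative_harm(reports, review_threshold=5):
    """Sum harm reports per person across all AI systems.

    reports -- iterable of (person_id, system_name, severity) tuples
    Returns the people whose combined score reaches review_threshold,
    even when no single report was rated high.
    """
    scores = defaultdict(int)
    systems = defaultdict(set)
    for person_id, system_name, severity in reports:
        scores[person_id] += SEVERITY_WEIGHT[severity]
        systems[person_id].add(system_name)
    return {
        person: {"score": score, "systems": sorted(systems[person])}
        for person, score in scores.items()
        if score >= review_threshold
    }


# Invented reports: no single harm is rated high.
reports = [
    ("p1", "chat triage", "low"),
    ("p1", "scheduling", "low"),
    ("p1", "benefit screening", "medium"),
    ("p2", "chat triage", "low"),
]
needs_review = cumulative_harm(reports)
```

Person `p1` reaches the threshold through three low and medium reports spread over three systems, which a per-application risk rating would not surface.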

4.3 Organizational processes to support accessible and equitable AI

Where organizations deploy AI systems, organizational processes should support AI systems that are accessible and equitable to people with disabilities. Each process should include and engage people with disabilities in decision-making throughout the AI lifecycle. Processes should be accessible to people with disabilities as staff members, contractors, clients, disability organization members or members of the public.

The organizational processes include processes used to:

- Plan and justify the need for AI systems;

- Design, develop, procure and/or customize AI systems;

- Conduct continuous impact assessments and ethics oversight;

- Train users and operators;

- Provide transparency, accountability and consent mechanisms;

- Provide access to alternative approaches;

- Handle feedback, complaints, redress and appeals mechanisms; and

- Provide review, refinement and termination mechanisms.

AI systems are designed to favour the average and decide with the average. Given the speed of change in the domain of AI systems and the comparative lack of methods to address harms to people with disabilities, equity and accessibility must depend not only on testable design criteria, but also on the organizational processes in place. This clause lays out organizational processes that will foster greater accessibility and equity.

4.3.1 Plan and justify the use of AI systems

Where an organization is proposing and planning to deploy an AI system, the impact on people with disabilities should be considered, and people with disabilities who will be directly or indirectly affected by the AI system should be active participants in the decision-making process.

Regulatory frameworks differ in their approaches, including framing based upon risk, impact or harm. Risk frameworks can manifest statistical discrimination in determining the balance between risk and benefit to the detriment of the statistical minorities. Impact assessments do not take statistical discrimination or cumulative harm into account. Harm-based frameworks depend upon reports of harms after they have occurred.

Many risks are not measurable by an organization and require the engagement of individuals and other organizations that are most impacted by the risks.

To meet this guidance, start by:

- Using impact assessments based on the broadest possible range of people with disabilities.

- Engaging people with disabilities who face the greatest barriers in accessing an existing function in the decision-making process, where the AI system is intended to replace or augment an existing function.

- Publicly disclosing intentions to consider an AI system in an accessible format.

- Distributing disclosure of intent to consider an AI system to national disability organizations and other interested parties.

- Establishing a process that allows organizations to be notified when intent to consider an AI system is disclosed.

- Providing accessible methods for input when intent to consider an AI system is disclosed.

4.3.2 Design, develop, procure, and/or customize AI systems that are accessible and equitable

Organizations are already obligated to consider accessibility and consult with people with disabilities when procuring systems. However, AI systems require a more careful and informed engagement in complex choices by people impacted by those choices.

To meet this guidance, start by:

- Including accessibility requirements that meet clauses 4.1 and 4.2 in design, procurement, re-training and customization criteria.

- Seeking input from disability organizations in decisions related to designing, developing, procuring and/or customizing AI systems.

- Engaging people with disabilities and disability organizations to test AI systems prior to their deployment.

- Fairly compensating people with disabilities and disability organizations that are engaged in testing AI.

- Verifying conformance to accessibility and equity criteria by a third party with expertise in accessibility and disability equity before a procurement decision of an AI system is finalized.

4.3.3 Conduct ongoing impact assessments, ethics oversight and monitoring of potential harms

Existing regulations and proposed regulations focus on high risk or high impact decisions. Given the cumulative harm experienced by people with disabilities, monitoring systems should also consider harms from low and medium impact AI systems. Systems that are considered low or medium impact for the general population may become high impact for people with disabilities.

To meet this guidance, start by:

- Maintaining a public registry of harms, contested decisions, reported barriers to access and reports of inequitable treatment of people with disabilities.

- Establishing and maintaining a publicly accessible monitoring system to track the cumulative impact of low, medium and high impact decisions on people with disabilities.

- Establishing thresholds for unacceptable levels of risk and harm with national disability organizations and organizations with expertise in accessibility and disability equity.

4.3.4 Train personnel in accessible and equitable AI

All personnel responsible for any aspect of the AI lifecycle should receive training in accessible and disability-equitable AI.

Harm and risk prevention or detection requires awareness and vigilance by all personnel. AI deployment is often used to replace human labour, reducing the number of humans monitoring and detecting issues. This means that all personnel involved should be trained to understand the impact of AI systems on people with disabilities and ways to prevent harm.

To meet this guidance, start by:

- Training all personnel in accessible and equitable AI in accordance with clause 4.4.2.

- Updating training regularly.

- Including harm and risk detection strategies in training.

4.3.5 Provide transparency, accountability and consent mechanisms

Accessible mechanisms that support users' informed consent to being the subject of AI system decisions should be provided to people with disabilities who are directly or indirectly impacted by an AI system.

Accessible mechanisms and transparency around AI decision-making are crucial for minimizing harm to people with disabilities, whether for low, medium or high-risk AI systems.

To meet this guidance, start by:

- Providing accessible information about training data, including what data was used to pre-train, customize or dynamically train an AI system.

- Providing accessible information about data labels and proxy data used in training.

- Providing accessible information about AI decisions and the determinants of those decisions.

- Providing accessible contact information of those accountable for AI systems and resultant decisions.

- Providing all information in a non-technical, plain language form, such that the potential impact of the decisions is clear.

- Allowing people to withdraw consent at any time without negative consequences.

4.3.6 Provide access to equivalent alternative approaches

Organizations that deploy AI systems should provide people with disabilities with alternative options that offer equivalent availability, reasonable timeliness, cost and convenience.

Human supervision and the necessary expertise are critical for making AI systems equitable and accessible for people with disabilities. Retaining individuals with this expertise will also avoid human skill atrophy.

To meet this guidance, start by:

- Providing an option to request human decisions made by people with knowledge, experience and expertise in the needs of people with disabilities.

- Providing an option to request human supervision or for the final determination of decisions to be made by a person with expertise in the needs of people with disabilities, guided by the AI.

- Retaining individuals with the expertise necessary to make equitable human decisions regarding people with disabilities when AI systems are deployed to replace decisions previously made by humans.

4.3.7 Provide feedback, complaints, redress and appeal mechanisms

Organizations should provide feedback, complaints, redress and appeal mechanisms for people with disabilities.

Without an easy and accessible way for people with disabilities to provide feedback about AI systems and their decisions, organizations cannot accurately track the impact on people with disabilities. Taking these steps makes AI systems and their decisions more transparent, holds organizations accountable, and allows both organizations and the AI models to learn and improve based on documented harms.

To meet this guidance, start by:

- Providing easy to find, accessible and actionable feedback, complaints, redress and appeal mechanisms.

- Acknowledging receipt of, and providing a response to, feedback and incidents in no more than 24 hours.

- Providing a timeline for addressing feedback and incidents.

- Offering a procedure for people with disabilities or their representatives to provide feedback on decisions anonymously.

- Communicating the status of addressing feedback to people with disabilities or their representatives and offering opportunities to appeal or contest the proposed remediation.
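The 24-hour acknowledgement target in the list above can be checked automatically. The sketch below is one possible approach, assuming feedback items are stored with timezone-aware timestamps. The function name `overdue_acknowledgements` and the dictionary keys are hypothetical.

```python
from datetime import datetime, timedelta, timezone

ACK_WINDOW = timedelta(hours=24)  # the 24-hour limit stated in the list above


def overdue_acknowledgements(items, now):
    """Return ids of feedback items not acknowledged within ACK_WINDOW.

    items -- iterable of dicts with hypothetical keys "id", "received_at"
             and "acknowledged_at" (None when not yet acknowledged)
    now   -- current time, passed in so the check is easy to test
    """
    overdue = []
    for item in items:
        deadline = item["received_at"] + ACK_WINDOW
        acknowledged = item["acknowledged_at"]
        if acknowledged is None:
            if now > deadline:  # still waiting and already past the limit
                overdue.append(item["id"])
        elif acknowledged > deadline:  # acknowledged, but too late
            overdue.append(item["id"])
    return overdue


def utc(day, hour):
    """Helper for the invented sample data below (January 2025, UTC)."""
    return datetime(2025, 1, day, hour, tzinfo=timezone.utc)


items = [
    {"id": "A", "received_at": utc(1, 9), "acknowledged_at": utc(1, 15)},
    {"id": "B", "received_at": utc(1, 9), "acknowledged_at": utc(2, 10)},
    {"id": "C", "received_at": utc(2, 8), "acknowledged_at": None},
    {"id": "D", "received_at": utc(3, 9), "acknowledged_at": None},
]
late = overdue_acknowledgements(items, now=utc(3, 12))
```

Items B and C are reported: B was acknowledged after 25 hours, and C is still unacknowledged after 28 hours. A report like this could feed the status communication described in the list above.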

4.3.8 Review, refinement, halting and termination

Organizations that deploy AI systems should continuously review, refine, and if necessary, halt or terminate AI systems.

Evaluation of AI system performance, and actions taken in response to the evaluation, should consider the impact on people with disabilities, even if these harms affect a relatively small number of people. This evaluation should be ongoing as performance can degrade and decisions can be skewed. People with disabilities are often the first to feel the effects of problems.

To meet this guidance, start by:

- Reviewing and refining AI systems regularly.

- Terminating the use of AI when necessary, such as in situations where the system malfunctions or equity criteria for people with disabilities degrade or are no longer met.

- Considering the full range of harms, including cumulative harms from low and medium impact decisions to people with disabilities.

4.4 Accessible education and training

Education and training should support the creation of accessible and equitable AI. This includes education and training in AI being accessible to people with disabilities, the coverage of content related to accessibility and equity within AI training, and the training of AI models.

Ensuring that education and training in the field of AI is accessible enables the participation of people with disabilities in all phases of the AI lifecycle. Incorporating principles of accessibility and equity in AI education and training fosters the creation of more accessible AI systems and tools. Continuously training and refining AI systems based on the risks to people with disabilities as well as contestations and requests for exceptions promotes AI that is more equitable to people with disabilities in the long run.

4.4.1 Training and education in AI

Training and education in AI should be accessible to people with disabilities.

People with disabilities should have the opportunity to participate in the development and design of AI systems with an understanding of those systems. Their direct involvement in the development of AI systems will help minimize the harms experienced by people with disabilities.

To meet this guidance, start by:

- Ensuring that training and education programs and materials are accessible.

4.4.2 Training and education in accessible and equitable AI

Where organizations provide training and education in AI, that training and education should include instruction on accessible and equitable AI.

Education and training in the field of AI should include information about creating accessible and equitable AI systems so that emerging systems and tools are designed inclusively.

To meet this guidance, start by:

- Integrating content on accessible and equitable AI in training and education.

- Including inclusive co-design methods with people with disabilities in training and education.

4.4.3 Training of AI models

Insights derived from contestation, appeals, risk reports and requests for exceptions should be used to refine AI systems while following strict privacy protection practices.

AI systems should be managed to learn from mistakes and failures so that these harms will not be repeated. This requires training using data regarding the range of outcomes from AI decisions, including data that highlights impacts on people with disabilities.

To meet this guidance, start by:

- Documenting contestation, appeals, risk reports and requests for exceptions.

- Continuously refining AI systems based on or using data from contestations, appeals, risk reports, and requests for exceptions.